This article is also available in German: read the German version.

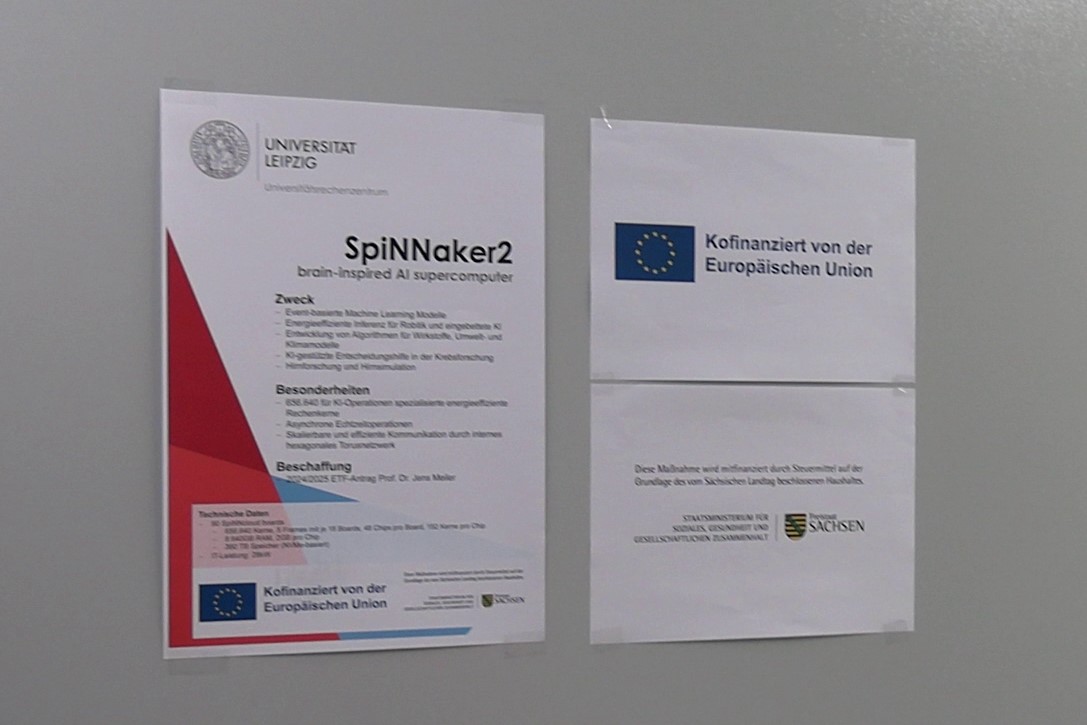

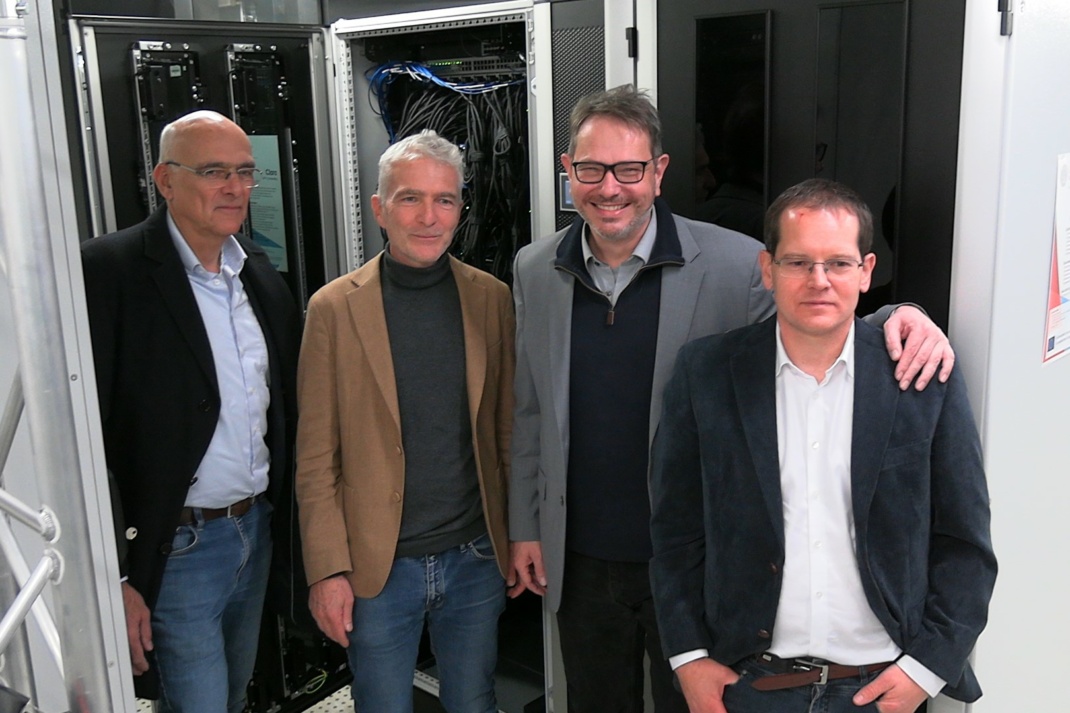

On October 28, Leipzig University unveiled its new, brain-inspired supercomputer to the local press. Before members of the press were allowed into the university’s “hallowed halls” to see SpiNNaker2 in action, Prof. Dr. Jens-Karl Eilers (Vice-Rector for Research at Leipzig University), Dieter Lehmann (Director of the University Computing Center), Prof. Dr. Jens Meiler (Director of the Institute for Drug Discovery), and Prof. Dr. Christian Mayr of TU Dresden, who is also the founder of the chip start-up Spinncloud, gave introductory remarks and answered questions.

Additional information can be found in Leipzig University’s official press release.

What Does “Brain-Inspired” Actually Mean?

Prof. Mayr explained: “Brain inspiration happens on a rather abstract level. One can imagine that in a computation, for example, we would prefer not to constantly retrieve bits from distant locations and then process them elsewhere. That requires significant time and energy.

So a brain-inspired concept really focuses on co-location: computation, memory, and communication are kept as close together as possible, and processes are distributed across the entire system. This principle is also relevant to drug discovery, as it provides greater memory bandwidth.

By distributing memory, we use many small memory units instead of one large unit, which would become a bottleneck and slow everything down.”

I followed up: “So, put simply, the similarity to the brain is that the CPU and memory sit together, so that data is processed where it is stored and data movement is minimized?”

Prof. Mayr: “Exactly. Other approaches attempt to emulate individual neurons or synapses, but we did not pursue that, because there is still too much we do not understand. From an AI perspective, there is currently no training algorithm that can train such detailed computational neuroscience models as effectively as today’s AI models.

Therefore, we focused on general, scalable principles of the brain that work in large computing systems. Co-location at every level makes sense: staying on the same chip, board, or frame, and generating as little data movement as possible.”
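To make the bottleneck argument concrete, here is a toy Python model. It is only an illustration under stated assumptions: the function names, core counts, and bandwidth figures are hypothetical and are not SpiNNaker2 specifications. Each core must read the same amount of data, either through one shared memory bus or from its own local memory, with all local memories serving their cores in parallel.

```python
"""Toy model: one shared memory vs. many small local memories.

All parameters are hypothetical, chosen only for illustration;
they are not SpiNNaker2 specifications.
"""


def shared_memory_time(n_cores: int, words_per_core: int, bus_words_per_cycle: float) -> float:
    """Cycles needed when every core fetches its data through one shared memory bus."""
    total_words = n_cores * words_per_core  # all requests funnel through the same bus
    return total_words / bus_words_per_cycle


def local_memory_time(words_per_core: int, local_words_per_cycle: float) -> float:
    """Cycles needed when each core reads only its own local memory, all cores in parallel."""
    return words_per_core / local_words_per_cycle


if __name__ == "__main__":
    words = 1_000  # data each core has to read (hypothetical)
    for cores in (1, 16, 128):
        shared = shared_memory_time(cores, words, bus_words_per_cycle=4.0)
        local = local_memory_time(words, local_words_per_cycle=1.0)
        print(f"{cores:4d} cores: shared={shared:8.0f} cycles, local={local:6.0f} cycles")
```

With these made-up numbers, a single fast shared memory wins for one core, but its cost grows linearly with the number of cores while the local-memory time stays flat. That growing queue at the shared memory is the bottleneck Mayr describes.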

The design also explains the machine’s energy efficiency. Prof. Meiler emphasized: “The clusters we currently use for artificial intelligence consume enormous amounts of energy, which is becoming a burden. This cluster is not only larger and faster, but also highly energy-efficient.”

Prof. Mayr added: “For standard language models, we are currently about 18 times more energy-efficient and three times faster than an NVIDIA board. And NVIDIA systems are specifically optimized for these models.”

What Makes the Supercomputer Important for Drug Discovery?

Prof. Mayr: “The brain does more than perform AI-like computations; it also performs probabilistic computing, in which randomness plays a role in optimization. In the end, we approach drug development somewhat like quantum computing does, using physics-based models. On GPUs, drug discovery tends to be more AI-driven.

Here, we can combine both approaches. We use highly accurate physical models and highly accurate AI models and, thanks to brain-inspired design, we can implement them more efficiently and quickly.”
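Mayr’s point that randomness can help with optimization has a classic textbook illustration: simulated annealing, which occasionally accepts worse solutions so that a search can escape local minima. The sketch below is a generic Python example of that idea, not the software that runs on SpiNNaker2; the Rastrigin test function and every parameter (start point, step size, temperatures, seed) are illustrative assumptions.

```python
import math
import random


def rastrigin_1d(x: float) -> float:
    """Standard 1-D Rastrigin test function: many local minima, global minimum f(0) = 0."""
    return 10.0 + x * x - 10.0 * math.cos(2.0 * math.pi * x)


def simulated_annealing(f, x0: float, steps: int = 20_000, t_start: float = 10.0,
                        t_end: float = 1e-3, step_size: float = 0.5, seed: int = 0):
    """Minimize f with random moves; worse moves are accepted with probability exp(-dE / T)."""
    rng = random.Random(seed)  # seeded so results are reproducible
    x, e = x0, f(x0)
    best_x, best_e = x, e
    cooling = (t_end / t_start) ** (1.0 / steps)  # geometric cooling factor per step
    t = t_start
    for _ in range(steps):
        candidate = x + rng.uniform(-step_size, step_size)
        e_new = f(candidate)
        delta = e_new - e
        # Always accept improvements; accept worse moves with Boltzmann probability.
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x, e = candidate, e_new
            if e < best_e:
                best_x, best_e = x, e
        t *= cooling  # lower the temperature: fewer uphill moves over time
    return best_x, best_e


if __name__ == "__main__":
    x, e = simulated_annealing(rastrigin_1d, x0=4.0)
    print(f"best x = {x:.4f}, f(x) = {e:.4f}")
```

Early on, the high temperature lets the search jump between valleys; as the temperature drops, it behaves more and more like plain downhill search.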

Which AI Applications Are We Talking About?

I asked whether this involved Large Language Models (LLMs) like Claude or Perplexity.

Prof. Meiler: “We can train LLMs on this system. These large language models are widely discussed at the moment, but they represent only one form of artificial intelligence. The system is highly flexible, and we will use it for LLMs and many other applications. There is a broad spectrum of AI methods, and this hardware is fundamentally designed to support a wide variety of AI models.”

How Close Is Hardware to the Brain?

“If you think about it, the brain runs on very little energy, roughly what we get from eating a banana or an apple in the morning. We’re slowly approaching the point where we can build systems of this scale. But even SpiNNaker requires much more power than the human brain, although it is significantly more efficient than conventional systems.”

Prof. Mayr added: “We are roughly halfway between GPUs and the brain. SpiNNaker2 is about three orders of magnitude (a factor of roughly 1,000) more efficient than GPUs, but still about three orders of magnitude away from the brain. We’re not there yet. I have enormous respect for the brain; it’s incredibly efficient.”
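The “roughly halfway” remark makes sense on a logarithmic scale. Reading Mayr’s two figures as factors of about 10³ each, and writing E for the energy needed per computation (notation introduced here for illustration), the arithmetic is:

```latex
\frac{E_{\text{GPU}}}{E_{\text{brain}}}
  = \frac{E_{\text{GPU}}}{E_{\text{SpiNNaker2}}} \cdot \frac{E_{\text{SpiNNaker2}}}{E_{\text{brain}}}
  \approx 10^{3} \cdot 10^{3} = 10^{6},
\qquad
\log_{10} 10^{3} = \tfrac{1}{2}\,\log_{10} 10^{6}.
```

So under this reading the overall gap between GPUs and the brain is about a factor of a million, and SpiNNaker2 sits at its midpoint in orders of magnitude, not in absolute terms.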

SpiNNaker2 will be used in both research and teaching. Students will learn to program their scientific problems to run on this hardware. Support for university spin-offs is also part of the plan.

An existing example is the start-up “Artificial Intelligence Driven Therapeutics,” previously presented at the MACHN Festival. Prof. Meiler emphasized: “For the region, this is a major opportunity—not only to educate excellent students, but potentially to establish 10, 20, or 30 such start-ups in the coming years, working with the outstanding students we have here.”

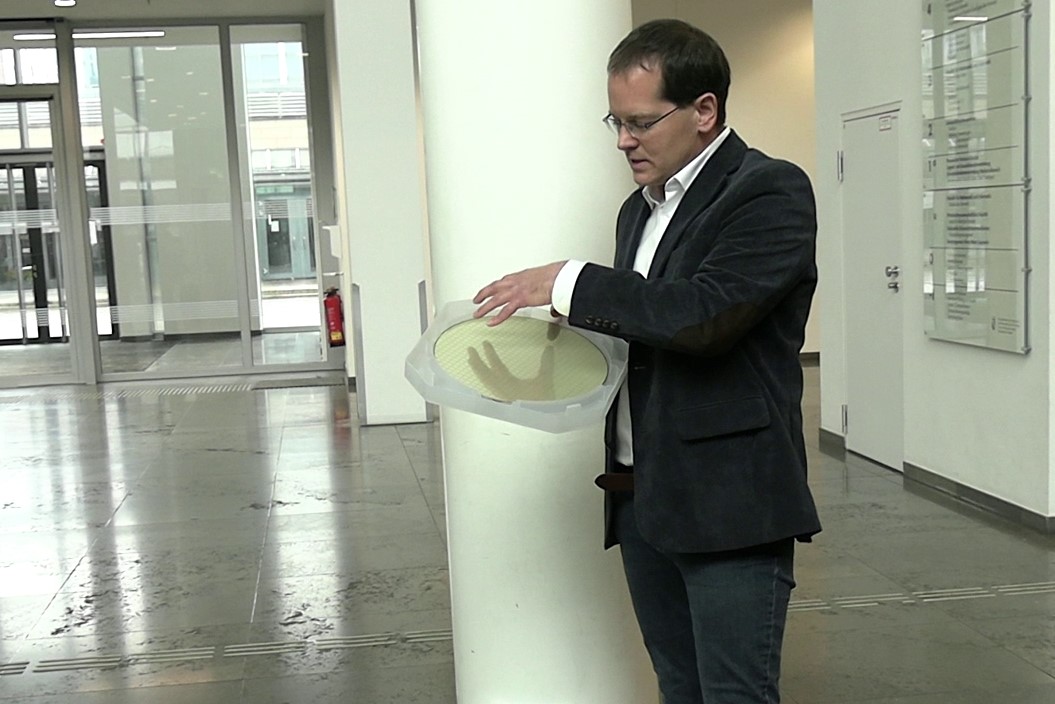

What Does SpiNNaker2 Look Like?

In the server room, as expected, the environment was loud, and SpiNNaker2 resembled a standard server system: many cables, blinking lights. Visually spectacular? Not really. But seeing it up close was impressive nonetheless.

In the end, SpiNNaker2 represents a major step forward for university research, teaching, and the Leipzig tech ecosystem. All that remains is to wish the team continued success and follow the project’s progress in the years ahead.
